Hard Problems for Robots: Embodied Cognition

Why touching one's toes is harder than writing a novel.

This AI critique is the first in a series that discusses unresolved hard problems for artificial intelligence. This chapter focuses on embodiment: appropriately using a physical body to perform tasks.

Much recent AI-related anxiety is a consequence of AI getting better at generating media. Because ChatGPT and Midjourney excel at simulating the internet discourse on which they were trained, it can seem to a severely online human as if these models are generally intelligent. In fact, these models are hopeless at even basic biological intelligence. Show me the ChatGPT-powered grass-touching robot!

How to move your body.

Suppose you want to reach out and pick up a pen on your desk.

This is a fairly complex behavior. You’ll need to understand what a pen is, what a desk is, and which parts of your visual stimuli correspond to those different objects. You’ll need to distinguish the pen parts of your environment from the not-pen parts and measure the distance between your hand and the target. You’ll need to be sure to inhibit closely related behaviors, like picking up a cup, or writing with a pen. These processes are complex enough to basically involve the entire brain. However, we’re focused on the final common pathway so we can start in the prefrontal cortex (PfC):

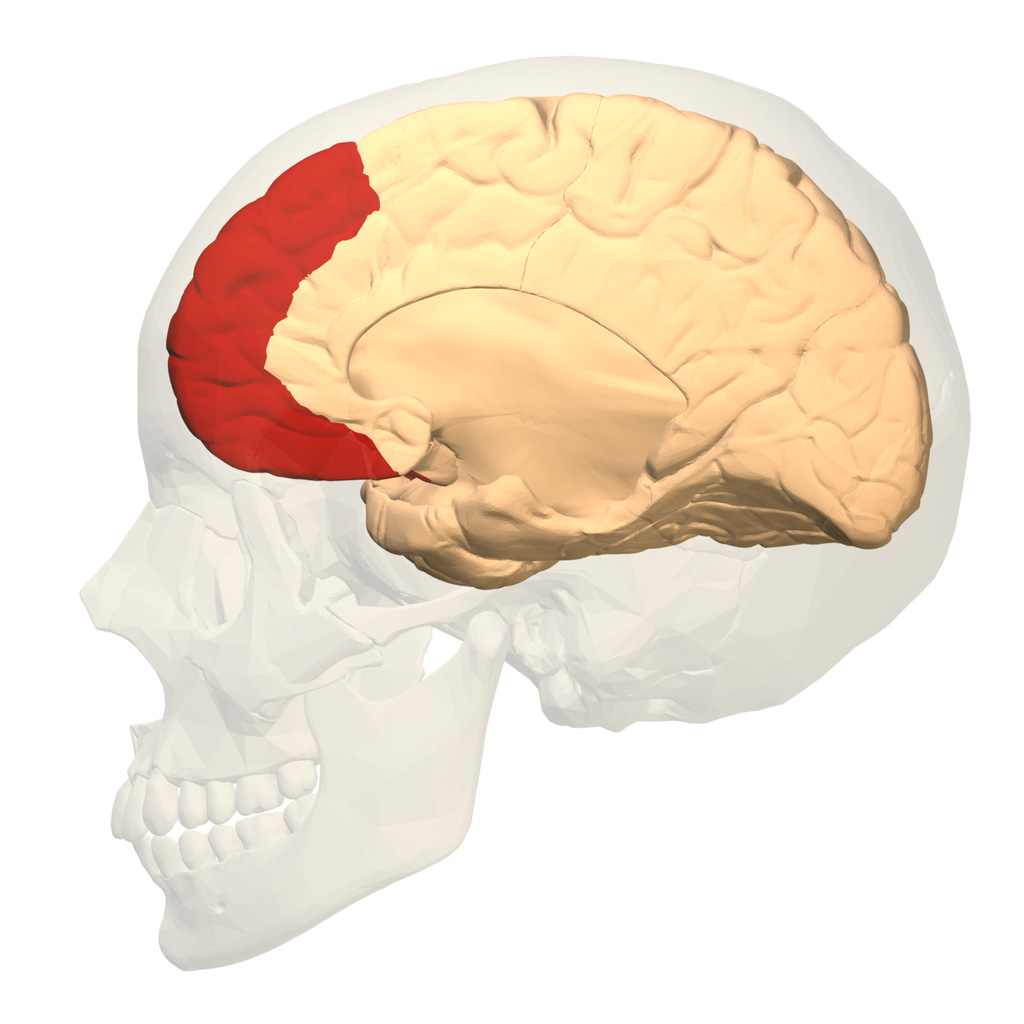

The PfC is where this stew of competing concepts is being represented in an abstract way. Next we’ll need a system that can focus in on the specific target of grabbing a pen. These structures are mostly in the basal ganglia embedded deep in the brain:

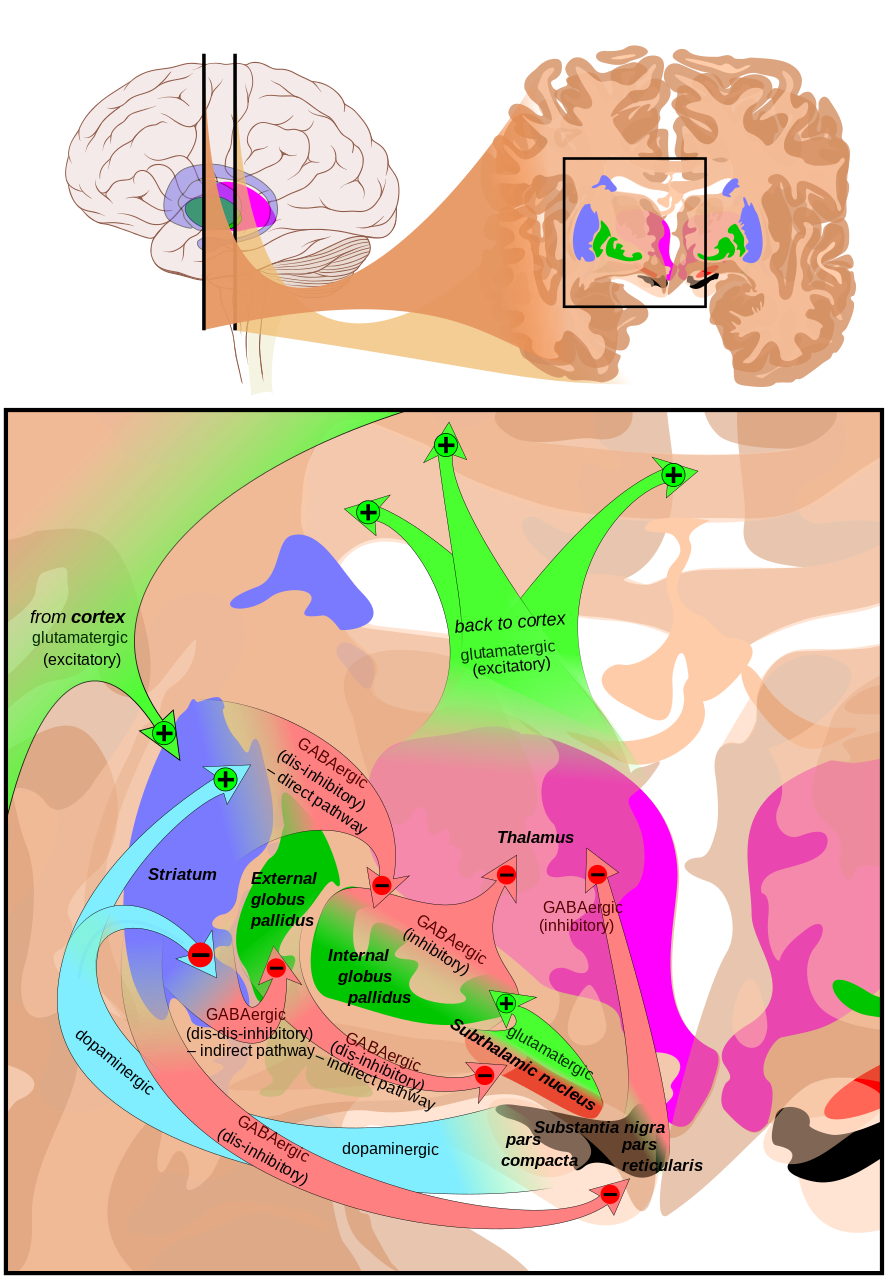

These structures contain fibers that turn on or off other brain regions. Using a pretty clever system of go/no-go logic gates they can shut down or activate the different pen/not pen representations in the PfC. Once the right configuration is amplified and the wrong ones are inhibited, the pen-reaching behavior can be passed off to the motor regions of the brain:

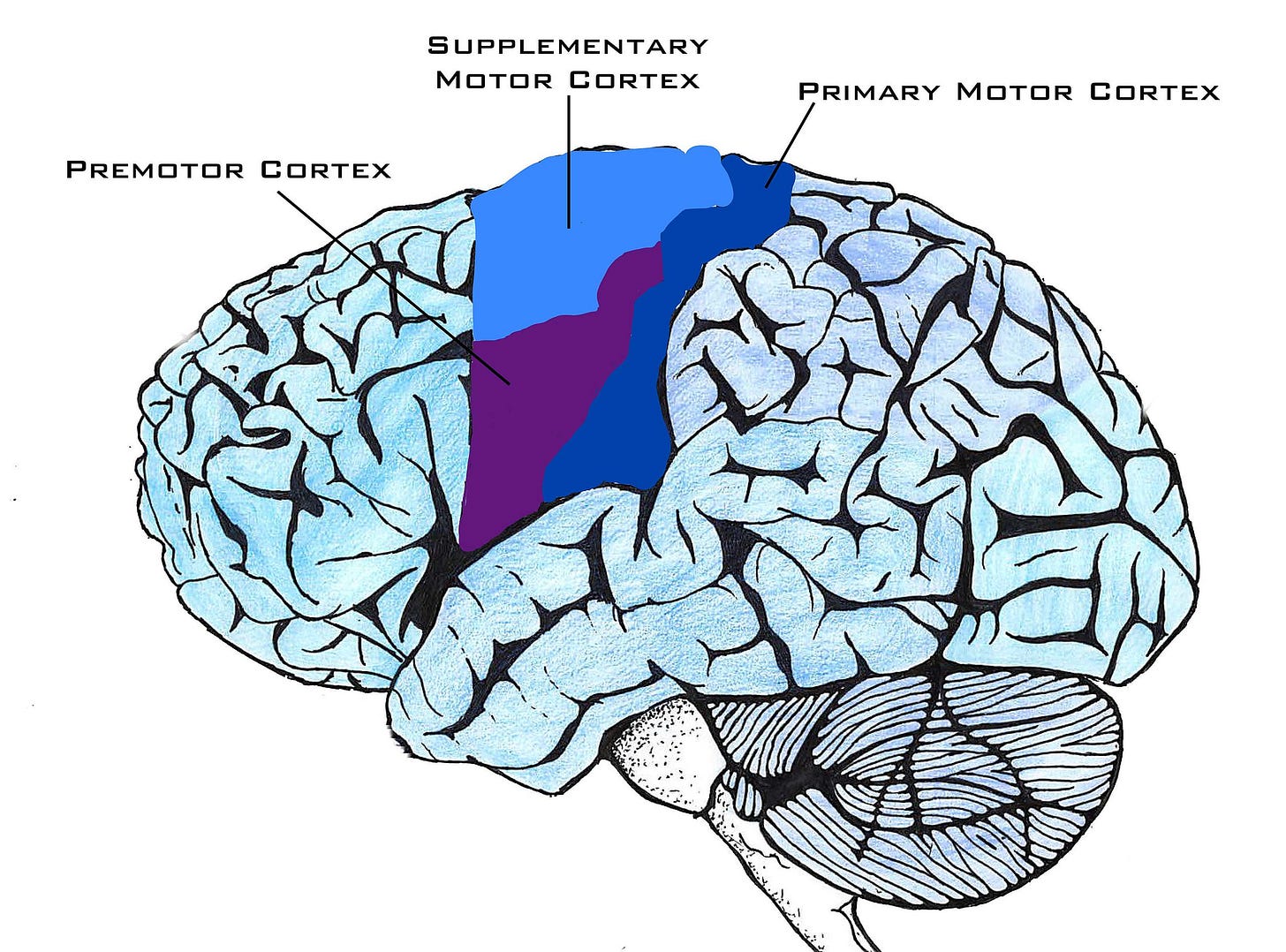

The premotor, supplementary motor, and primary motor cortices work out the specific details of what motor program the body should run to actually carry out the reaching.

But we’re not actually done. In fact, we’re still about six or seven synapses from the hand! We know we’re reaching for a pen, and we even know we’re going to use our hand to do it, but we still need an entire additional brain to carry out the computations to make that reach.

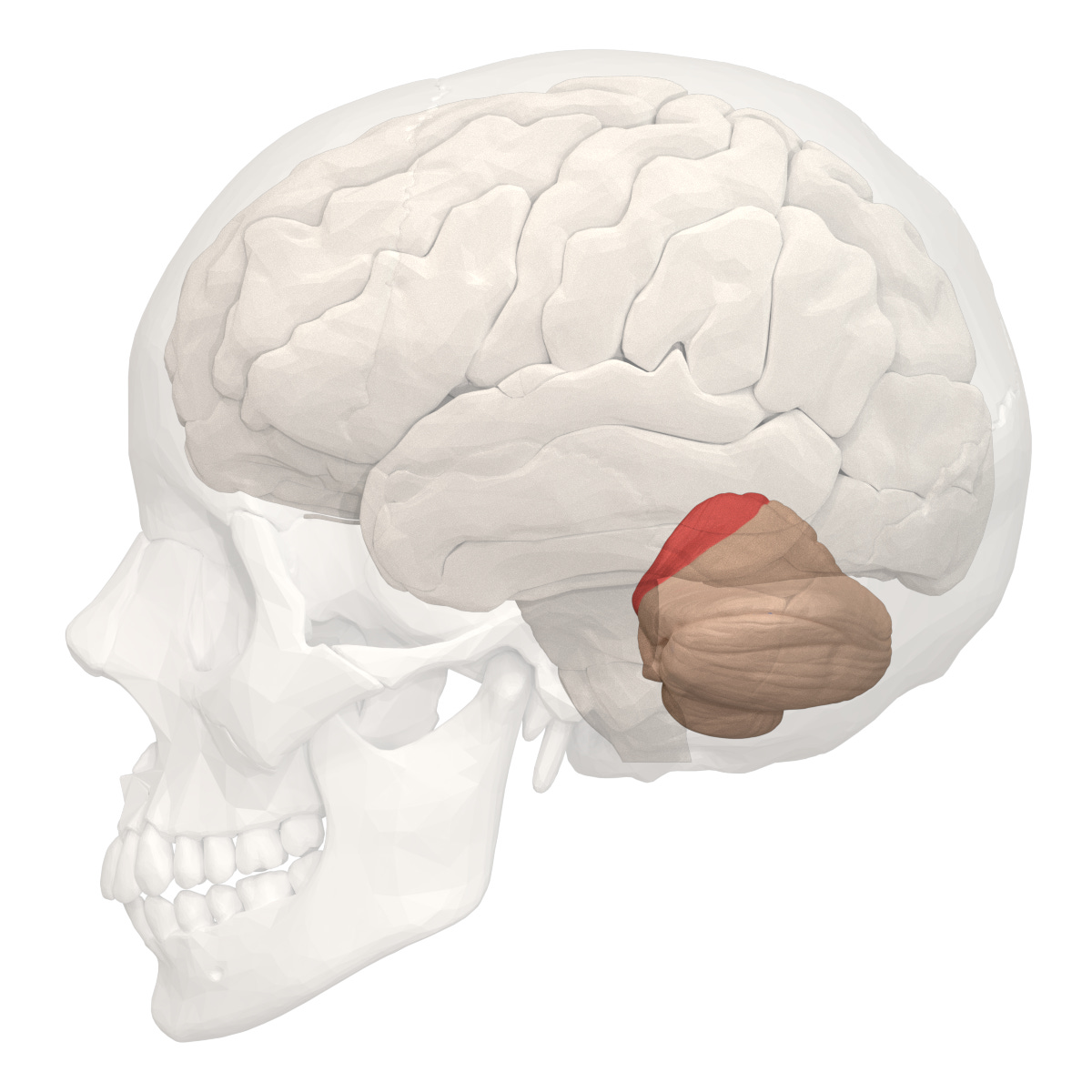

Did you know you have a second, smaller brain in your brain that’s just for moving your body? It’s the cerebellum, which you might know as the little wrinkly guy hanging off the back of the brain.

The cerebellum does a number of things, but arguably its most important role is translating motor plans into actual algorithms for moving muscles. Think of this processing like doing a coordinate substitution. Your eye looks down and sees the pen at a certain angle relative to your head, but the direction your hand has to move in is at a different angle. So all the math your motor cortex did to figure out how to reach for the pen is wrong unless you have a hand growing out of your eyeball. The cerebellum can do the translation to shift the eye’s frame of reference to the hand’s frame of reference. It will also do all the scut work necessary to change all of the visual angles to joint angles. Your arm is not a tentacle. You don’t just have to reach in the right direction, you have to use your muscles to set each joint to the proper position. Roboticists call the problem of working out those joint positions “inverse kinematics,” and solving it for mechanical arms is a real bear.
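To make the coordinate substitution concrete, here is a minimal sketch for a flat, two-joint arm (shoulder and elbow). Everything in it is an assumption made for illustration: the function names, the 30 cm segment lengths, and the positions of the eye and the pen. A real arm has more joints and moves in three dimensions, and nothing here is meant as a model of what the cerebellum actually computes.

```python
import math

def eye_to_shoulder(target_eye, eye_in_shoulder, head_angle):
    """Re-express a 2-D point seen in the eye's frame in the shoulder's frame."""
    x, y = target_eye
    c, s = math.cos(head_angle), math.sin(head_angle)
    # Rotate by the head's orientation, then shift by the eye's position.
    return (eye_in_shoulder[0] + c * x - s * y,
            eye_in_shoulder[1] + s * x + c * y)

def forward(shoulder, elbow, upper=0.30, fore=0.30):
    """Joint angles (radians) -> hand position in the shoulder's frame."""
    return (upper * math.cos(shoulder) + fore * math.cos(shoulder + elbow),
            upper * math.sin(shoulder) + fore * math.sin(shoulder + elbow))

def inverse(target, upper=0.30, fore=0.30):
    """Hand position -> joint angles (one of the two possible elbow bends)."""
    x, y = target
    cos_elbow = (x * x + y * y - upper**2 - fore**2) / (2 * upper * fore)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(fore * math.sin(elbow),
                                             upper + fore * math.cos(elbow))
    return shoulder, elbow

# Hypothetical numbers: where the pen appears to the eye, and where the eye
# sits relative to the shoulder (metres), with the head tilted 20 degrees.
pen_seen_by_eye = (0.10, -0.45)
pen_for_arm = eye_to_shoulder(pen_seen_by_eye, (0.15, 0.25), math.radians(-20))
shoulder, elbow = inverse(pen_for_arm)
print("joint angles (deg):", math.degrees(shoulder), math.degrees(elbow))
print("hand ends up at:", forward(shoulder, elbow))
```

Even in this toy version the same pen has different coordinates depending on who is asking, and the joint angles have to be recomputed every time the pen or the head moves.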

We’re still not done! The cerebellum can get us more or less to the point where our hand is at the right place and our fingers are closing in on the pen, but the precise amount of force applied, and the tiny shifts in tension on finger muscles that allow us to hold on if the pen starts to slip, have nothing to do with the brain at all. Those are mostly spinal reflexes. The nerves in the hands and spine automatically make micro-adjustments in response to sensory feedback from the touch receptors in the hand. This is not to say the brain doesn’t know about those adjustments. It does! It gets tons of mechanoreceptive and proprioceptive feedback and it constantly adjusts its plans in response to this data. But the loop between brain and hand takes a quarter of a second. If the hand waited for the brain to respond to a slippery pen, we’d drop stuff.
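A rough back-of-the-envelope calculation shows why a quarter of a second is too slow. The sketch below assumes the worst case, a pen that has slipped completely free and is in free fall, and it assumes a 30 millisecond spinal reflex loop purely for comparison. Only the quarter-second figure comes from the text above.

```python
G = 9.81  # gravitational acceleration, m/s^2

def drop_distance(latency_s, g=G):
    """Distance a released object falls before any correction can arrive."""
    return 0.5 * g * latency_s ** 2

BRAIN_LOOP_S = 0.25   # brain-to-hand loop, as stated in the text
REFLEX_LOOP_S = 0.03  # ASSUMED spinal reflex latency, for illustration only

for name, latency in [("brain", BRAIN_LOOP_S), ("reflex", REFLEX_LOOP_S)]:
    print(f"{name}: pen falls {100 * drop_distance(latency):.1f} cm")
```

Under these assumptions the pen has fallen about 30 cm by the time the brain can respond, against less than half a centimeter for the faster loop.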

And this is basically why AI can’t draw hands.

Why can’t AI draw hands?

Everyone knows that AI can’t draw hands. But why is it so bad? There must be tons of pictures of hands.

This video indulges in the most common, wrong explanation: human brains are especially attentive and sophisticated in how they process hands. This is garbage. It’s cargo-cult neuroscience at best. The brain does devote a ton of real estate to hands, but in sensory-motor regions, not visual ones. Besides, human brains devote even more space to faces, in both sensory-motor and visual processing areas. Yet AI faces are comparatively pretty good; the best way to detect them is to check for Balenciaga cheekbones. In any case, this explanation gets us nowhere. Saying that humans dislike AI hands because they are sensitive to depictions of hands is begging the question.

Besides, the explanation of hands fails to answer another critical AI art question: why does Batman have three legs?

AI struggles with depictions of bodies because bodies are highly configurable. It’s valid for Batman’s leg to be in either position depending on where he is in his stride. The model needs to make a tricky judgement call about how to render the image. This is a technique that cartoonists play with as well: it’s valid to draw Batman with what appears to be three legs so long as you add some motion blur to suggest movement.

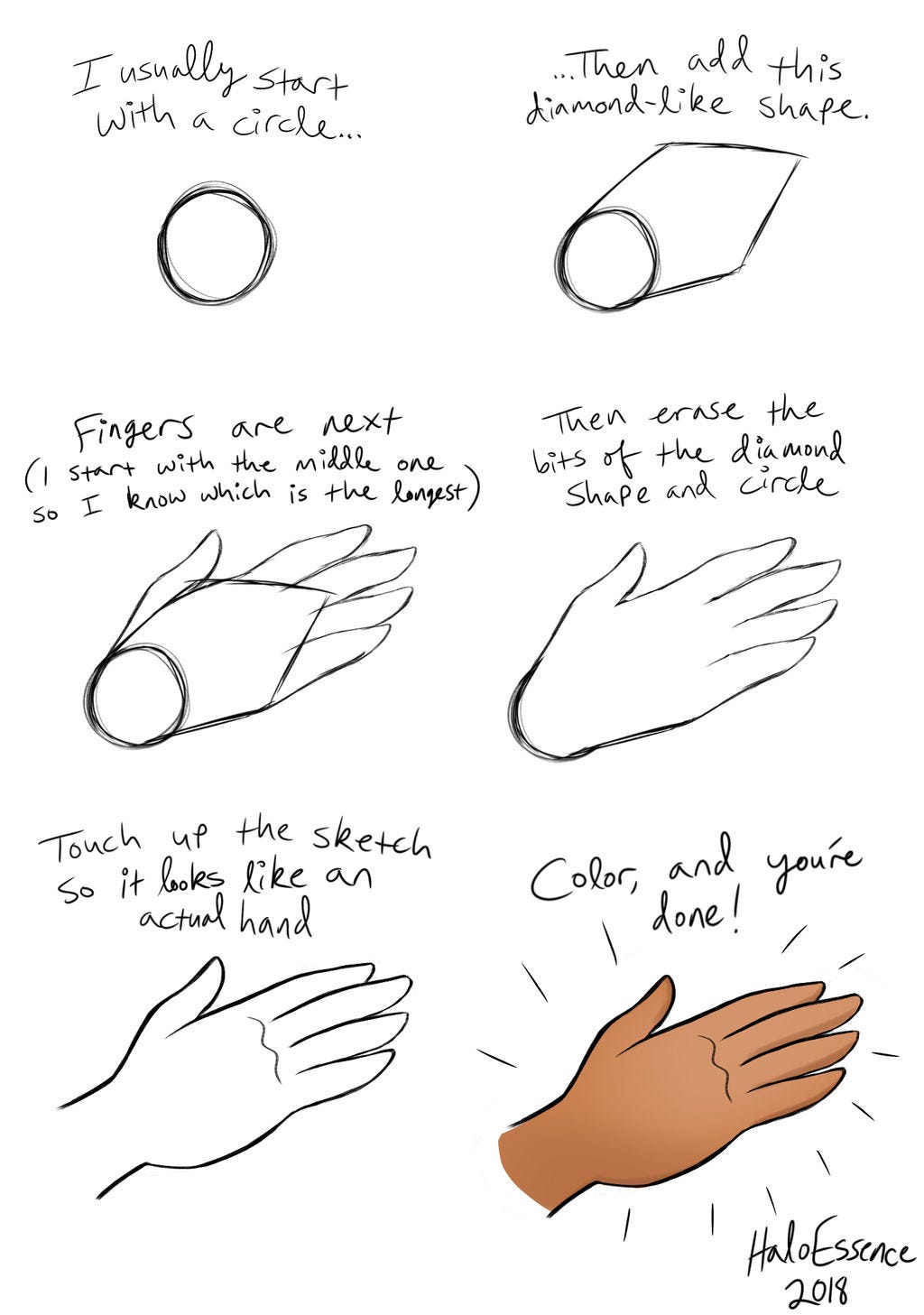

Hands are even more configurable than feet. There are many valid positions for fingers, and they move a lot. Both diffusion models like Midjourney and Transformer-based models like DALL-E draw by inferring continuations of edges and forms. First, the model draws the average of many hands (a blob) and then refines that blob by adjusting with data from more specific contexts. In other words, they draw a hand a lot like this:

Another thing to notice is how ordinary language usually does not describe physical body positions. If I prompt DALL-E by saying “draw an artist” I haven’t specified if they are holding a pencil, and I certainly haven’t specified if they’re using a dynamic tripod or palmar supinate grip to hold that pencil. If you do go out of your way to use a specific prompt like “Draw a left hand, as viewed from above. It should have exactly five fingers, the joints of each finger should be held straight,” you get a pretty good rendering. Except that it’s a right hand.

It’s really difficult to describe specific configurations of physical bodies with language! In fact, it would be awfully nice if I had a real physical hand attached to me that I could use as a reference. Even better if I had a huge system of neural processing with tons of specialized processing units dedicated to how to configure that hand.

How to compute with your body.

Much of human (and animal, and robot) cognition is embodied. That is, the reasoning and representations used by biological brains refer to the specific sensory-motor assemblies of our physical bodies. Because we have a body we usually don’t need to learn explicit representations for how bodies work—we can use our own body as a simulation.

If I want to imagine how a hand looks when holding a pen, I can just pick up a pen and use my own body as a reference. I’m so familiar with my body that I usually don’t even need to go that far; the configuration is immediately intuitive. I’m so plugged into the physics of my own body that I can run physical simulations using that system effortlessly and save all of the computation that I’d normally need to use my brain for. A computer scientist might call my body a “specialized quantum computer purpose-built for physics simulations,” and that would be an accurate, albeit awkward, way of describing the state of affairs.

Some embodied processing is pretty obvious: controlling a robot body accurately obviously requires a pretty elaborate representation of that body. But some is more subtle: our bodies are loaded with sensory-motor feedback loops that allow us to reposition our grip without thinking and perceive subtle textural differences. How broadly this embodied processing contributes to human cognition is a subject of debate. There are folks who argue that nearly every aspect of human thought depends on some kind of embodied processing (one example that particularly sticks with me is the claim that we understand fluid dynamics because we take baths), but even if you don’t go as far as metaphor, it’s inarguable that having a body is really handy for many kinds of real-world predictions.

All of this creates some fairly substantial challenges for AI. It’s one thing to draw a hand by configuring pixels on a screen; it’s much harder to draw a hand using a hand. If AI is going to have meaningful impact in the physical world, it will need to learn to control real physical bodies. And there are some strong reasons to believe that the way that biological bodies work is about as good as it gets. Superior robot embodied cognition is never going to happen.

“Never” is a pretty strong claim that deserves some unpacking. After all, what about this robot bartender? What about these dancing robots?

Don’t get me wrong, these applications are super awesome, and definitely count as AI controlling bodies under challenging circumstances. The single-foot balance at 1:38 probably made some robotics engineering students fall out of their chairs. This is Boston Dynamics showing off, and they deserve it. But there’s some sleight of hand here. These robots are running a specific list of macros in controlled conditions. They have millimeter precision for this dance routine and this invites the audience to imagine that in highly similar circumstances—with a different dance, with a slightly slippery floor—they would also perform well. When in fact, this performance is extremely brittle and would fail catastrophically if there was a light breeze.

Non-uniform bodies

Think back to the complicated transforms the cerebellum had to estimate to translate reaching for a pen to actual joint angles. Now imagine that the pen was always exactly 10 cm directly to the right of the center of your hand. That would be way easier! You could always run exactly the same reaching program. Sure it would be a complicated list of numbers to translate between eyes and hands, but the numbers would always be the same. There wouldn’t be any calculation, just lookup.

That’s exactly what Boston Dynamics is doing with its dancing robots. Those movements are big look-up tables that say things like “set the knee joint to 45 degrees for 3 seconds.” Current robotic systems mostly work by running lists of specific movement macros that are tightly adapted to the specific task. These macros often don’t even include feedback sensors other than a measurement of how hard the servomotors are working, occasionally coupled with readings from accelerometers and gyroscopes. This is beginning to change! Roboticists are rapidly working to develop new sensors, materials, and tools that produce a better sense of the position of their machines in space. It’s also not entirely clear that this needs to be solved in exactly the same way as biological systems do. While we use lots of sensors embedded in our joints and muscle tissue, many robots use a cloud of infrared light to estimate the position of their limbs. That’s a clever alternative.
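The difference between lookup and calculation can be sketched in a few lines. The macro below is a hypothetical list of joint settings for a flat, two-joint arm, tuned once for a pen at one fixed spot. The segment lengths, angles, and hold times are invented for illustration and are not taken from any real robot.

```python
import math

UPPER_ARM = 0.30  # metres; illustrative segment lengths
FOREARM = 0.30

def hand_position(shoulder_deg, elbow_deg):
    """Where the hand ends up for a given pair of joint angles."""
    s = math.radians(shoulder_deg)
    e = math.radians(elbow_deg)
    return (UPPER_ARM * math.cos(s) + FOREARM * math.cos(s + e),
            UPPER_ARM * math.sin(s) + FOREARM * math.sin(s + e))

# Each row: (shoulder angle, elbow angle, seconds to hold the pose).
REACH_MACRO = [
    (90.0, 0.0, 0.5),    # arm straight up
    (45.0, 45.0, 0.5),   # swing toward the desk
    (0.0, 90.0, 1.0),    # final pose: hand ends at (0.30, 0.30)
]

def play(macro):
    """Replay the table open-loop: no sensing, no correction."""
    hand = None
    for shoulder_deg, elbow_deg, _hold_s in macro:
        hand = hand_position(shoulder_deg, elbow_deg)
    return hand

def miss(macro, pen):
    """Distance between where the macro puts the hand and where the pen is."""
    return math.dist(play(macro), pen)

print("pen where expected:", miss(REACH_MACRO, (0.30, 0.30)))
print("pen nudged 5 cm:   ", miss(REACH_MACRO, (0.35, 0.30)))
```

With the pen where the macro expects it, the miss is essentially zero. Nudge the pen 5 cm and the hand misses by about 5 cm, because nothing in the table looks at the pen.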

But these sensory-motor advances still have a major blind spot: they assume that the body doesn’t change.

The brain not only has to calculate new transforms to cover a huge diversity of circumstances, but it also has to re-estimate them whenever the body changes. If you attempt to apply a model fit for one body to a different one, the model will make very predictable errors. You probably experienced this yourself if you ever went through a major growth spurt. Suddenly you were very clumsy as your body tried to reach out for things with arms slightly longer than your brain was used to. And everyone experiences this at birth because the brain has no idea what kind of body it’s going to end up in before that body has actually grown itself. That’s a big part of the reason the brain has so many partially redundant systems and sensory-motor feedback loops. When you’re working with a self-assembling system produced by evolution it’s got to work. It’s got to work, buddy, or you’re dead. It’s ok if it’s a little clumsy, but it can’t fail catastrophically like this:

As Rodney Brooks at MIT famously pointed out, embodied sensory-motor loops are hard to build, and they’re evolutionarily older than anything cognitive. It’s not an insurmountable challenge, but it took evolution billions of years to make body sensors, and only a few hundred million to get from there to brains capable of calculus. The fact that AI has stumbled into competence at calculus and language is not evidence of sophistication in walking. We should be realistic about the difficulty of the embodied engineering challenge.

I think the engineering for embodied cognition is currently happening. There are technologies currently being developed to create sophisticated physical robots. The same kind of statistical, generative models that work for text and images can also work for embodied systems and have been applied in some limited circumstances. There are a couple of serious, but surmountable stumbling blocks. First, the amount of motor data that has been collected and digitized (such as joint position, muscle load, and other proprioceptive information) is trivial compared to the amount of digitized text and images. Second, the motors and sensors that generate and collect such data are incredibly primitive relative to keyboards and cameras. Lidar scanners and embedded kinesthetic sensors will probably have to be commonplace before we get really robust dancing robots.

But even when we do create the requisite sensors, collect the necessary data, and train the right models, we’ll still be in a position very much like biological systems. No matter how good your model is, it only works for the body it learned to control. A different body requires a different model, or at least some fine-tuning to adapt what was previously learned to the new instantiation. Tiny differences in friction in joints lead to extremely clumsy fingers. After a few months of wear, robots will have to re-learn. Human manual dexterity is an absolute marvel in the animal world and it’s hard to learn: it takes 15 to 20 years before kids aren’t completely clumsy. And that skill only lasts about 50 years before the joints start to accumulate damage and freeze up.
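The claim that a model only works for the body it learned to control can be illustrated with a toy arm. In this sketch, which uses a flat, two-joint arm with invented segment lengths, the controller plans a reach using the limb lengths it learned, and the plan is then executed on an arm whose forearm has grown by 3 cm. The function names and numbers are assumptions for illustration only.

```python
import math

def forward(shoulder, elbow, upper, fore):
    """Joint angles (radians) -> hand position for an arm with these lengths."""
    return (upper * math.cos(shoulder) + fore * math.cos(shoulder + elbow),
            upper * math.sin(shoulder) + fore * math.sin(shoulder + elbow))

def inverse(target, upper, fore):
    """Hand position -> joint angles, for an arm with these lengths."""
    x, y = target
    cos_elbow = (x * x + y * y - upper**2 - fore**2) / (2 * upper * fore)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(fore * math.sin(elbow),
                                             upper + fore * math.cos(elbow))
    return shoulder, elbow

def reach_error(target, learned, actual):
    """Plan with the learned body, execute on the actual body, measure the miss."""
    shoulder, elbow = inverse(target, *learned)
    return math.dist(forward(shoulder, elbow, *actual), target)

LEARNED = (0.30, 0.30)  # (upper arm, forearm) the model was fit to, in metres
GROWN = (0.30, 0.33)    # same arm after the forearm grows 3 cm

for pen in [(0.40, 0.20), (0.10, 0.45), (0.25, -0.30)]:
    print(pen, "miss:", reach_error(pen, LEARNED, GROWN))
```

In this toy case every reach overshoots by the 3 cm of extra forearm, whatever the target. The error is systematic rather than random, which is why it can be learned away but also why it shows up on every single reach until it is.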

Real brains for a real world

All of this still only just scratches the surface of what embodied cognition really means. For humans and animals the most important sensory-motor loops are actually chemical. Olfaction and gustation are the obvious ones, but more important are probably the internally-facing sensors that measure blood glucose, blood pressure, hydration, electrolytes, circulating lipids, temperature, and so on.

Most critical of these are probably nociceptors—pain detectors. I had a colleague at UIUC who got a multi-million dollar humanoid robot that he hoped to teach to speak using deep neural nets and natural language processing models. It was a very cute little guy named “Bert.”

Bert spent the first three years of his life trying not to rip off his own very expensive arms. The millions of dollars did not go toward an embodied pain sense. And take it from people who don’t experience pain: life without pain is hell.

Ironically, humans’ extreme body competence makes them somewhat poor judges of AI capabilities. Drawing with a pen is tremendously more difficult than simply coloring pixels (which is why indie game devs love pixel art). Handwriting is more difficult than typing (no one reading this blog could decipher my handwriting). Because generative AI produces high-quality images and text, we tend to assign it some of our own body competence. It’s a strange sort of reverse Turing test, where humans’ ingratitude toward the miracle of their own body leads them to dramatically overestimate the sophistication of an AI without a body.

Moving bodies is a hard problem. There’s a ton of awesome, heavy-duty engineering going on in this space. It’s a great area to get into now because this is likely to be a decades- or centuries-long effort. And at the end of that work we will still have imperfect robot bodies that need constant repairs and fine-tuning, just like real bodies do. Because they exist in the real world and the real world is full of rough parts, slippery parts, and sharp edges.

It’s objectively far, far harder to pick up a pen than it is to write code. We just don’t value it because it’s the kind of boring miracle that any healthy kid can do.